Running Notes on AI

I've written a few posts about AI. I expect my thoughts will keep changing. Since writing helps me think, I'm using this post as a running list over time. Mostly this will be coding related. Other aspects will show up from time to time.

-a

Fair warning that some of this stuff will wander. Part of the reason I'm making these updates is to "think out loud", but with typing.

Nov. 12, 2025

-

The idea of AI summaries is compelling. There's an article about the way we teach kids to read. I'd like to read it myself, but it's super long. I'd settle for just the highlights. In fact, that's about the only way I'd get the info.

But, I don't want an AI summary of it. I want a human summary of it. I don't trust AI to get it right.

Nov. 4, 2025

-

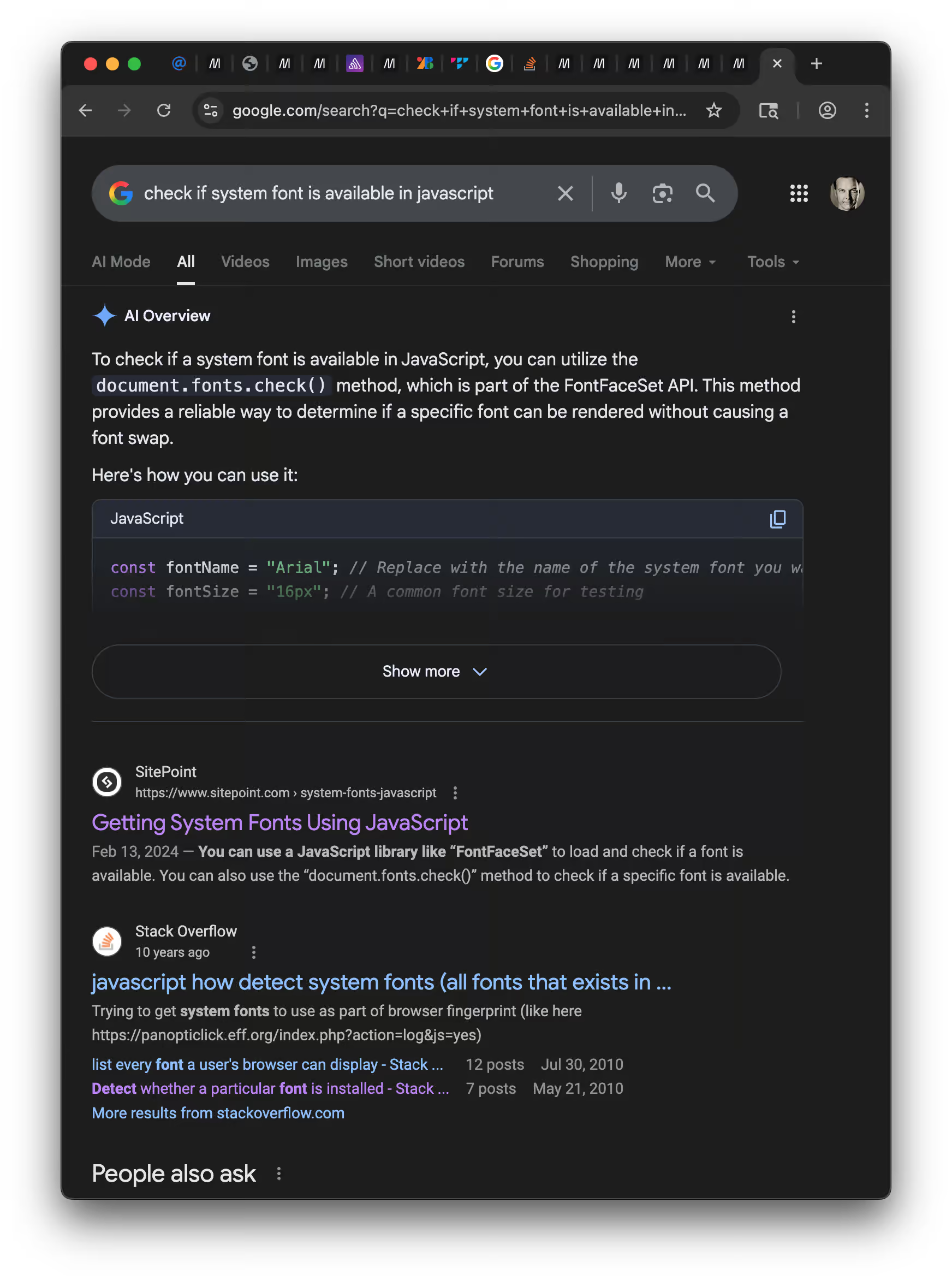

Searching for a way to check if a system font is available on a web page through JavaScript. The top section is an "AI Overview" that says to use

document...

That's invalid for two reasons. First, the value needs to be awaited with something like:

document....More importantly, that method of checking doesn't work for system fonts. If the font doesn't exist it still returns

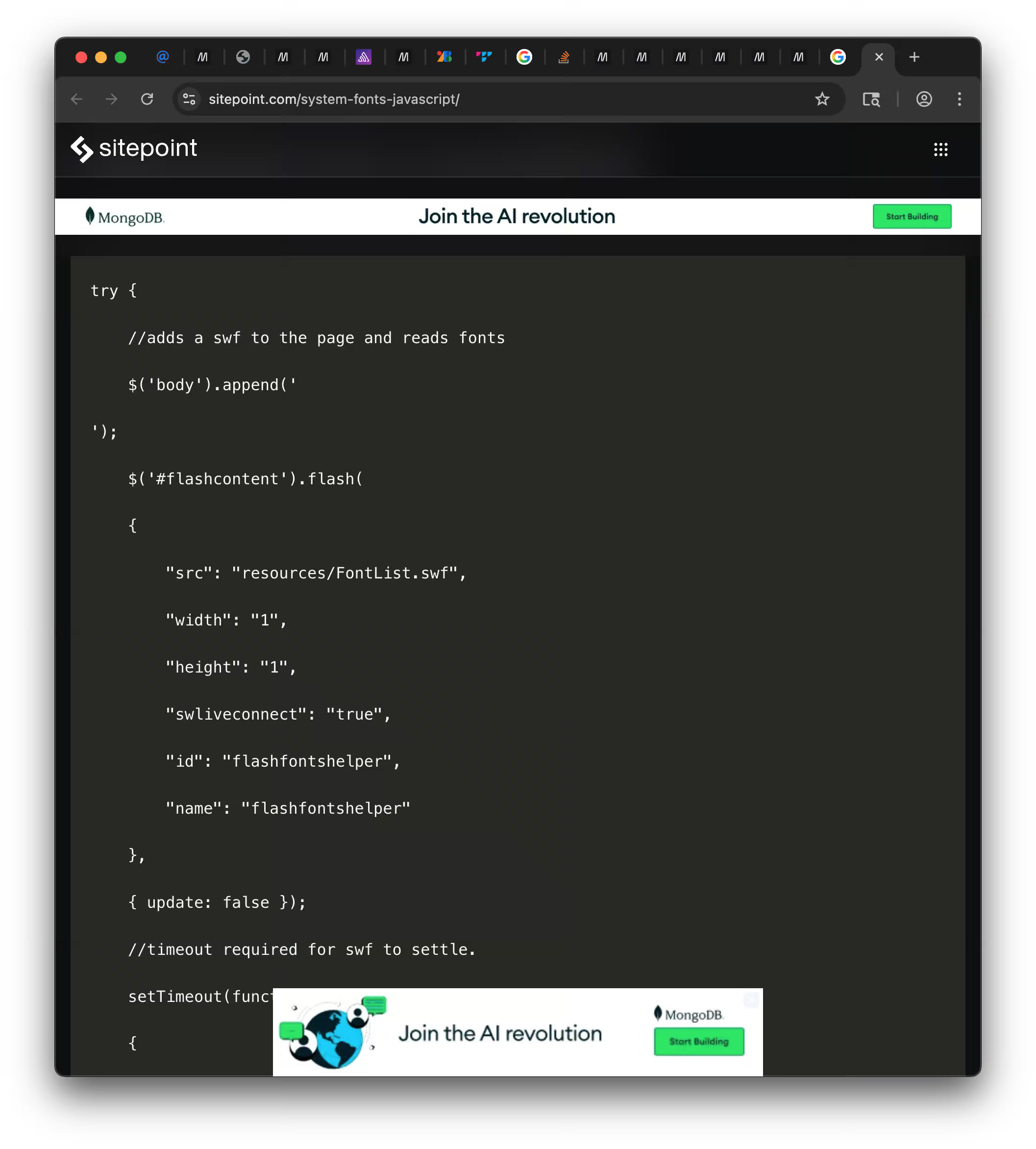

true(See Noneexistent fonts- The second entry is a link to a site I'm not going to link to. I think it used to be an okay source, but now it's overrun with slop. Here's an image of the results where it says the answer is to use Flash. Something that was removed from macs in 2010 which effectively killed it.

So, the AI response was to use a tech that hasn't been readibly available for fifteen years.

-

My biggest feeling about AI right now is that it's exhausting. Searching for answers takes energy. Before the onslaught of AI slop, searching meant looking through things that generally worked but may not have been what you needed.

Now, the AI results often show something that looks like what you need, but doesn't actually work. That burns more energy because I spend time trying to get something to work that never will.

Oct. 27, 2025

-

I'm looking for a way to do nested HashMaps in Rust. I've got an idea for a way to do it, but wanted to see if there was already something that just did it for me. I did a little searching and hit a couple posts from years ago that were almost certainly written by humans and a few that were slop.

I gave Claude a shot. It gave me code that didn't compile at first, but with a small addition of a .clone() call it compiled. The code almost worked for the given test input, but it would only store one thing at a time instead of multiple (which is what I prompted for and what it said it was doing in the comments).
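
For reference, here's a minimal sketch of one way to nest HashMaps so each slot holds multiple things. It assumes String keys and a Vec as the innermost value. The `Nested` alias and the `insert_nested` helper are names made up for illustration, and this is not the code from that session.

```rust
use std::collections::HashMap;

// Hypothetical alias: outer key -> inner key -> list of values.
type Nested = HashMap<String, HashMap<String, Vec<String>>>;

// Hypothetical helper: append a value, creating any missing level on the way down.
fn insert_nested(map: &mut Nested, outer: &str, inner: &str, value: &str) {
    map.entry(outer.to_string())
        .or_default() // creates the inner HashMap if the outer key is new
        .entry(inner.to_string())
        .or_default() // creates the Vec if the inner key is new
        .push(value.to_string());
}

fn main() {
    let mut map = Nested::new();
    insert_nested(&mut map, "fruit", "red", "apple");
    insert_nested(&mut map, "fruit", "red", "cherry");
    insert_nested(&mut map, "fruit", "yellow", "banana");

    // Both red entries are kept instead of the second replacing the first.
    println!("{:?}", map["fruit"]["red"]);
}
```

Pushing onto a Vec through the entry API is what keeps multiple values around. Calling `insert` at the innermost level would overwrite the earlier value each time.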

I went back to searching and hit this online book. It looked promising at first, but then I noticed it's from RantAI. The book lists authors, but like, I'm pretty sure it's just AI generated slop.

Makes me wary. It increasingly feels like there's just no way to trust that what you're getting was actually written by a person.

Oct. 23, 2025

-

I've used AI a few times. Each time, I find myself rooting against it. Like, I want it to not work. I want it to be a binary thing where it's either good or it's not, but that's not how it works. Sometimes it's fine. Sometimes, it gives you a good answer.

It would be so much easier to push back against it if it was always crap. That sounds obvious to say, but that thought has been more of a feeling than something I brought to the front of my mind.

Oct. 11, 2025

- Possible analogy: Using AI is like looking at pictures of art instead of going to the museum.

-

AI feels like getting things wrong faster. Where getting things wrong is the path to getting things right.

The difference is that it gives me wrong things directly instead of me having to look up things to try that end up not working.

I think the difference is I can see up front when I'm looking at things if they'll work or not. The AI presents the answers as if they'll work even when they're flat wrong.

With docs, the code will almost certainly work. It's just that what I'm looking at might not be the thing I want to do. Or, it takes a while to assemble the thing I want to do from multiple sources.

-

Using AI doesn't show me what's around the thing I'm looking at.

For example, if I look up Rust's .map method for iterators there's an entire sidebar full of all the other methods. That's where I learned about .map_windows, which does a map, but with groups of items for each iteration.
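
A quick sketch of the difference. As far as I know, .map_windows on iterators is still nightly-only, so this swaps in the stable .windows method on slices to show the same "groups of items per iteration" idea. The helper names are made up for illustration.

```rust
// Hypothetical helper: plain .map, one item per iteration.
fn doubled(nums: &[i32]) -> Vec<i32> {
    nums.iter().map(|n| n * 2).collect()
}

// Hypothetical helper: slice .windows(2) hands the closure overlapping pairs.
// This is a stable stand-in for .map_windows, not .map_windows itself.
fn pair_sums(nums: &[i32]) -> Vec<i32> {
    nums.windows(2).map(|w| w[0] + w[1]).collect()
}

fn main() {
    let nums = [1, 2, 3, 4];
    println!("{:?}", doubled(&nums)); // [2, 4, 6, 8]
    println!("{:?}", pair_sums(&nums)); // [3, 5, 7]
}
```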

-

I'm watching the Bama vs Missouri game while I'm writing this. There was a commercial for Claude AI.

Later, another one for ChatGPT.

The marketing is pervasive.

-

I still haven't tried "Vibe Coding" where I'm prompting the AI for large sections of code or full-blown apps.

That's just not appealing to me. I want to make the thing. Not just have it exist in the world.

Maybe AI is like paint-by-numbers in that respect. You add a little color, but you're only filling things in. Not actually making something yourself.

-

Had another instance where I prompted for something and the first answer it gave me was not possible (using [...] on a [...] in CSS). I was pretty sure that wouldn't work since [...] is part of [...], but I'm not practiced enough to be sure. That sent me searching and to a discord for confirmation.

-

That leads to another part. I don't just want something to work. I want to know how it works. Each piece of knowledge becomes a new tool that I can play around with in my head to see what I can do with it. Another element I can add when I'm working on something new.

AI doesn't feel like it provides that. I miss out on the acquisition of new tools for the mental toolbox.

-

A month or two ago I learned that my psychologist is considering adding AI into the note-taking process in their practice. I'm really not digging that idea.

I've got it on my list of things to write up a post for them with all the reasons it's a bad idea. Privacy, accuracy, and environmental impact being the key factors.

Oct. 5, 2025

-

Post: AI's Inevitable Photographic Journey

Considering AI through the lens of photography's history.

-

Post: My Current Thinking about AI - Oct. 2025

A draft response to a question I got in a discord about AI. It led directly to that Photographic Journey post.